Introduction

Here, I am writing an example of halo exchange for message passing interface (MPI). This technique is usually used to decompose a big matrix or computational domain into smaller blocks. Halo exchange is usual part of high performance math and CFD solvers. The example here deals with a halo of arbitrary thickness 1, 2, 3,… and transfers corner nodes. The blocks can be rectangles and sparse in space.

The code

The code for the halo exchange including the examples is on GitHub here.

Domain and Block

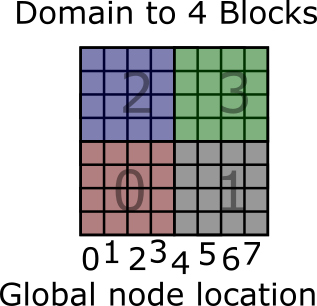

Imagine we have an 8x8 domain (in real life 10s, 100s millions of nodes) where each node contains some data.

Domain is divided into 4 blocks (or partitions) where each block is run by a processor. We separate each block to be like

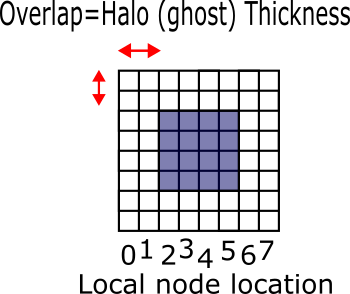

In this example, halo has thickness of 2 nodes (or cells). The halo nodes are also called ghost nodes because they are not the actual node but representative of nodes from other blocks.

Exchange Sketch

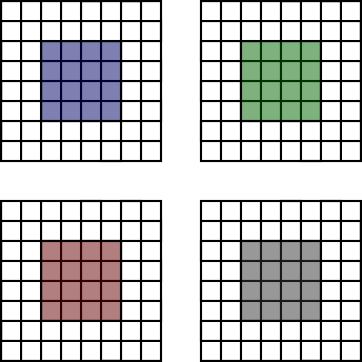

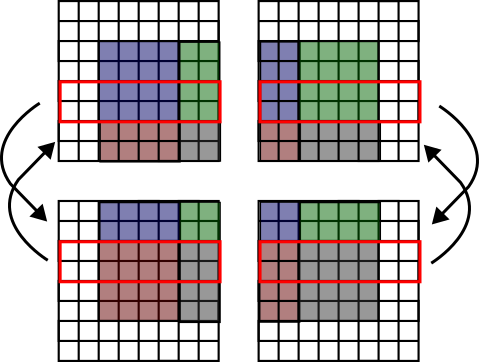

To exchange halo, first we have four block like this:

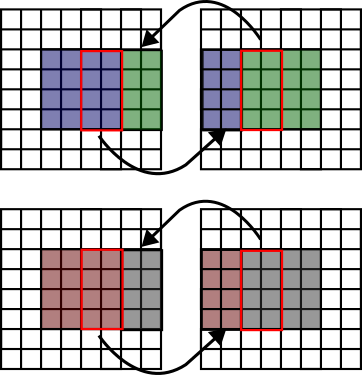

The first communication happens along x-axis

The second communcation happens along y-axis. Note that here the communicated boundary extended to ghosts, this is necessary to have corners transfered correctly:

That’s it. If you look at closely the ghosts are correctly representing their actual data.

The order of communications, first x-axis then y-axis, is necessary to get corners transfered. If you are not interested in the corners, the order is not important.

Axis and Directions

I define some array functions (arrays that look like functions):

enum Dir{right, top, left, bottom};

int oppositeDir[4]{left, bottom, right,top};

enum Axis{x,y};

Axis DirToAxis[4]{x,y,x,y};

Axis GetNormalAxis[2]{y,x};

std::array<Dir,2> GetDirsOfAxis[2] {{left,right},{bottom, top}};

Note enum values start from zero (right=0, top=1,…) and are suitable to be array index. So

opposite[right] is left

DirToAxis[right] is x

...

These are very efficient arrays that look like functions. It is better that enums to be in a namespace, but here I am just presenting the rough idea. Moreover, it’s good to know that scoped enums (enum class) cannot be used as array index that’s why I don’t use them here.

Box code

Here we need to write a small geometry code for a box which can be a whole block or a section of a block. To define a box, we only need information for bottom-left (low) and top-right (high) corners:

struct Box{

std::array<int,2> low;

std::array<int,2> high;

// constructors and ....

}

One good handy function for Box is shrinking or enlarging along x/y axis:

Box Shrink(int thickness, Axis axis){/*some code */}

And define a shrink which does the previous function along both x and y axes:

Box Shrink(int thickness){/* some code*/};

Therefore, if we have a block, we can easily shrink it with ghost thickness to get local domain box.

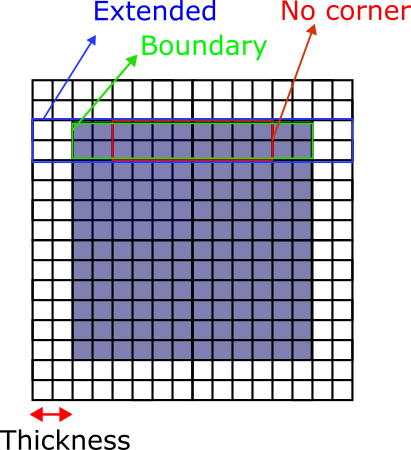

To make things even easier for later on, I defined three functions which give

Box GetBoundary(Dir dir, int thickness){/*some code*/}

Box GetNoCornerBoundary(Dir dir, int thickness){/*some code*/ }

Box GetExtendedBoundary(Dir dir, int thickness){/*some code*/}

For the below purple box they produce the shown boundary boxes for top direction and thickness=2:

Now, before going forward, have a look at the exchange section and the functions we defined here. The functions make it very easy to select different length boundaries at different directions.

Block code

A block stores

- information for each node, here is just a

doublenumber. The code is flexible to accommodate custom types to be node’s information, - dimensions (width and height),

- overlap or ghost/halo thickness

- its neighbouring blocks which are computed by other processors

- MPI data types (subarrays) for boundaries. They make transfer of boundaries a piece of cake.

struct Block{

std::vector<double> nodes;

std::array<int,2> dims;

int overlap = 1;

std::vector<std::tuple<int,Dir>> neighbours;

MPI_Datatype boundaryMpiType[4];

MPI_Datatype ghostMpiType[4];

// rest ...

}

We write a few functions to access nodes with x and y indexes:

auto cartToIndex(int ix, int iy){

return iy + ix*dims[1];

}

auto& Get(std::integral auto... ix){

return nodes[cartToIndex(ix...)];

}

A few points about development here:

Getfunction can be replaced with()operator,Getis written with variadic parameters, to show the code can be easily expanded to 3D block. Read more on variadic templates here.

We need a function that creates MPI subarray type for a given box (boundary)

auto SetBoundaryMpiType(const Box& box, MPI_Datatype& type){/* code */}

Now create MPI types for boundaries

auto CreateAllBoundaryArrays(){

auto domain = GetDomainBox();

SetBoundaryMpiType(domain.GetBoundary(Dir::right,overlap), boundaryMpiType[Dir::right]);

SetBoundaryMpiType(domain.GetExtendedBoundary(Dir::top,overlap), boundaryMpiType[Dir::top]);

// code for left and bottom boundaries

auto ghost = GetGhostBox();

SetBoundaryMpiType(ghost.GetNoCornerBoundary(Dir::right,overlap), ghostMpiType[Dir::right]);

SetBoundaryMpiType(ghost.GetBoundary(Dir::top,overlap), ghostMpiType[Dir::top]);

// code for left and bottom ghost boundaries

}

Imagine we have two blocks like this:

the beauty of using subarrays for boundary transfers is that sending the subarry of right boundary of block 0 sits nicely on subarray of left boundary of block 1. It is like copying a slice of a block and paste it somewhere desired in another block. The blocks don’t need to be the same size but subarrays must be so. Note that, during this copy-paste the selected slice is not mirrored i.e. the order of slice nodes in space is preserved which is desirable in the halo exchange.

In MPI send-receive functions, tags of directions are important when there are periodic conditions and we have only two blocks. A block sends its left and right boundaries to another block. Tags help to identify which one is which.

The setup is finished, now the plan is to code the exchange section. The lowest level of communication is between on side of current block with a neighbour on the same side:

auto communicate(Dir dir, int dest){

MPI_Request sendRequest, recvRequest;

MPI_Irecv(&nodes[0], 1 , ghostMpiType[dir], dest, oppositeDir[dir] , MPI_COMM_WORLD, &recvRequest)

// similiar Isend code...

return std::array<MPI_Request,2>{sendRequest, recvRequest};

}

We want the communcations happen along x then y axis in order:

void communicate(Axis axis)

{

for (auto &neighbour : neighbours)

{

const auto &[cpuId, dir] = neighbour;

if (DirToAxis[dir] == axis)

{

auto [sendReq, recvReq] = communicate(dir, cpuId);

sendRequests.push_back(sendReq);

recvRequests.push_back(recvReq);

}

}

for (auto &r : recvRequests)

{

MPI_Status s;

MPI_Wait(&r, &s);

}

recvRequests.clear();

if (axis == Axis::y)

{

for (auto &r : sendRequests)

{

MPI_Status s;

MPI_Wait(&r, &s);

}

sendRequests.clear();

}

}

Some points about the above code:

- x-axis communication is called first, then y-axis,

- when a block receives x-axis messages, we are good to run y-axis communications,

- so we wait only for receiving messages (not sending) along x-axis,

- but at the end of y-axis communications, we wait for receiving messages and all sending messages including the x-axis ones.

- waiting for both sending and receiving messages, at end, ensures the data in the halo and local domain of a block is not used by MPI anymore and we can modify them.

- find out about different modes MPI send here.

- blocks in space can be sparse i.e. a block can have 0, 1, 2, 3 or 4 neighbouring blocks.

And finally, high level communication happens like:

void communicate(){

communicate(Axis::x);

communicate(Axis::y);

}

Example 1

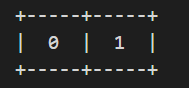

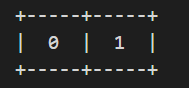

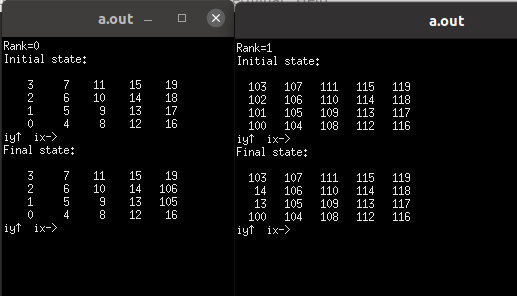

The first example (or test) in the code is about two horizontally neighbouring blocks of 5x4 nodes which send-and-receive the shared boundary:

The halo thickness is 1. Each block is filled with unique numbers. The initial state and the state after the communications are shown in this picture:

To see outcome of each process in different terminal, run the program with this

mpirun -np 2 xterm -hold -e ./executable_file

Example 2

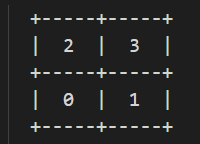

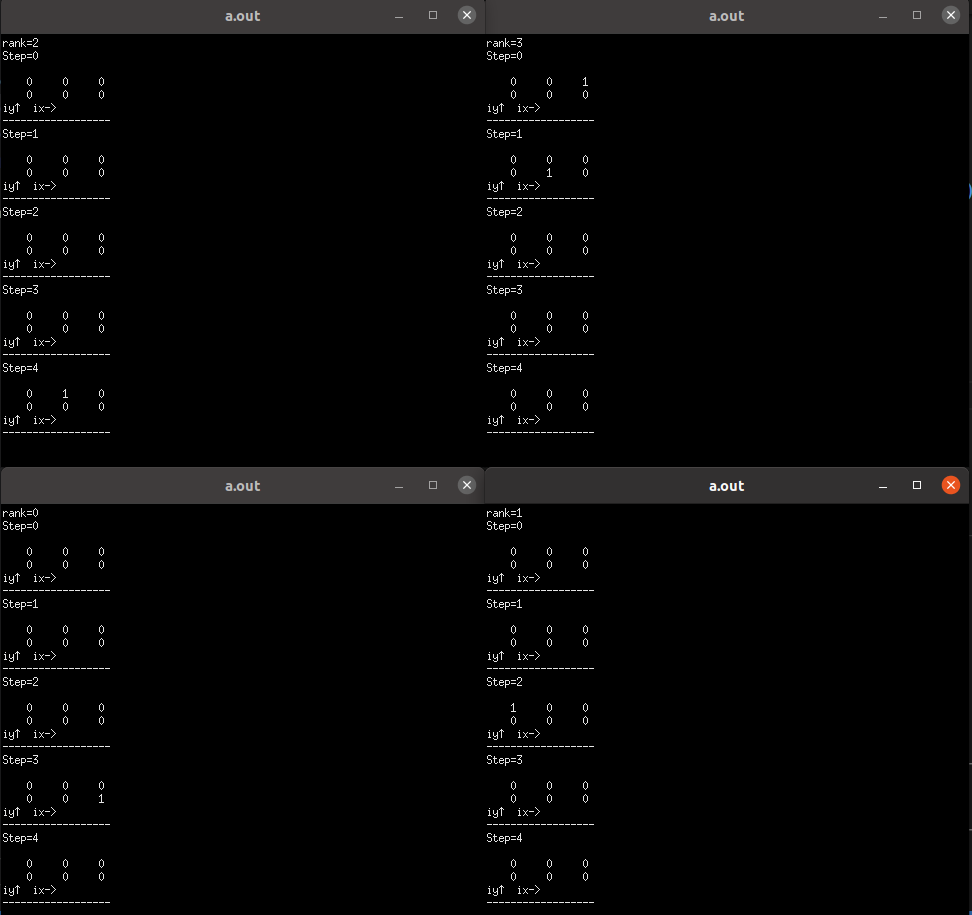

This example in the code, is about 4 periodic blocks where each has the size of 5x4 including halo (thickness=1) and 3x2 without halo. The blocks {0,1,2,3} are place in space like:

All the nodes of blocks are filled with zero except top corner of block 3 which contains 1. After each communication, we move the data in all nodes one node lower and one node to the left, in other words:

node[i-1][j-1] = node[i][j]

Therefore, after each step we see our special data, 1, is moving diagonally in a block and when crossing the boundary, jumps into another block. The results is shown in the picture below without showing ghost nodes:

Example 3

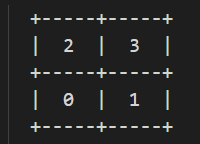

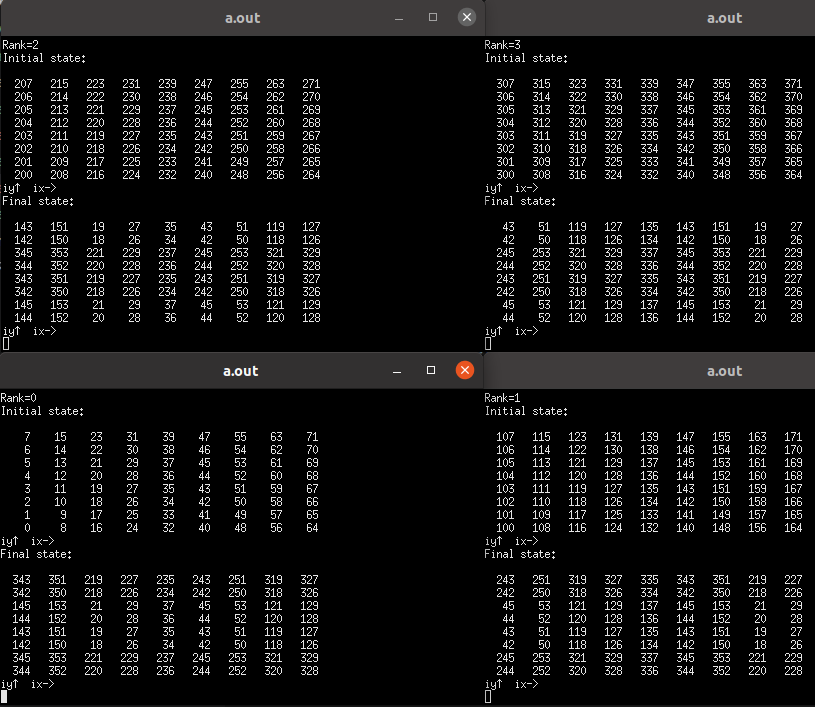

Here I chose bigger 9x8 blocks to have halo thichkness of 2. Four blocks are having periodic conditions and placed in space like:

Blocks are filled with unique numbers, so the halo exchange can be accurately inspected. The initial and final state of the blocks are shown in the picture below:

Improvement

The code is written in a rough way, for the sake of brevity and education. It can be improved by

inttypes for sizes are replaced withsize_t,- changing

BoxandBlockstructs to classes and separate private and public members, - creating proper constructors,

- the information on each node is just a

double, this can be generalized with a generic type and adding intrinsic and custom MPI types (see MPI custom type creation), - here, I used x-axis then y-axis communications to transfer corners. Alternatively, we could transfer corners directly. This change can increase number of communications but the code wouldn’t need x-y communication order,

- it would be nicer to replace

Getfunction ofBlockwith()operator, - Enums should be in their own namespace to not pollute the code,

- the code can be upgraded to 3D. Box class will be upgraded to 3D system. The communication order will be x, y and then z.

Latest Posts

- A C++ MPI code for 2D unstructured halo exchange

- Essential bash customizations: prompt, ls, aliases, and history date

- Script to copy a directory path in memory in Bash terminal

- What is the difference between .bashrc, .bash_profile, and .profile?

- A C++ MPI code for 2D arbitrary-thickness halo exchange with sparse blocks