Introduction

Slurm is an open-source job scheduler for Linux machines, especially clusters. Here, I show an example of submitting a hybrid MPI-OpenMP job on a cluster. I tested the code on Cirrus, a supercomputer in the UK.

Prerequisites

I assume you are generally familiar with the Message Passing Interface (MPI) and OpenMP.

C++ Code

The code we want to run on the cluster is simple. Every OpenMP thread prints the program ID, node name, MPI rank, OpenMP thread ID, and a few other values. The program takes one argument, the program ID. This mimics the common situation where we want to run a program several times with different inputs.

To get the node name, I use the SLURMD_NODENAME environment variable, which Slurm sets on each compute node.

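```cpp
#include <chrono>
#include <cstdlib>
#include <ctime>
#include <iomanip>
#include <iostream>
#include <sstream>
#include <thread>

#include <mpi.h>
#include <omp.h>

int main(int argc, char *argv[])
{
    // OpenMP creates threads, but only the main thread calls MPI,
    // so MPI_THREAD_FUNNELED is the thread-support level we need.
    int provided;
    MPI_Init_thread(&argc, &argv, MPI_THREAD_FUNNELED, &provided);

    int rank;
    int size;
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    // The program ID is passed as the first command-line argument
    if (argc < 2) {
        if (rank == 0) std::cerr << "usage: " << argv[0] << " <program-id>\n";
        MPI_Finalize();
        return 1;
    }

    // Set by Slurm on the compute node; null when run outside a Slurm job
    const char* env_p = std::getenv("SLURMD_NODENAME");
    const char* node_name = env_p ? env_p : "unknown";

    // Start time of this process, read once before the parallel region
    std::time_t t = std::time(nullptr);
    std::tm tm = *std::localtime(&t);

    #pragma omp parallel
    {
        // Build the whole line first so each thread writes it in a single call,
        // which reduces interleaving between threads
        std::stringstream stream;
        stream << "program:" << argv[1]
               << " Node name: " << node_name
               << " MPI rank:" << rank << " MPI size:" << size
               << " thread:" << omp_get_thread_num()
               << " max Threads:" << omp_get_max_threads()
               << " time:" << std::put_time(&tm, "%H:%M:%S") << '\n';
        std::cout << stream.str();
    }

    // Keep the processes alive for 10 seconds, e.g. so the runs can be watched with squeue
    std::this_thread::sleep_for(std::chrono::seconds(10));

    MPI_Finalize();
}
```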

Slurm terms

- Node: a computer that is part of a cluster.
- Task: a process, such as an MPI process. A serial program is one task.
- CPU: usually a CPU core, but depending on the system configuration it can mean a socket or a hardware thread.
- Job: a request to run a program on a set of allocated resources.

Submission Script

- Each node on Cirrus has 36 cores.
- I want to run the program 4 times with 4 different inputs.
- I use 2 nodes, so 2 runs go on each node.
- Each run uses 6 MPI processes (12 per node).
- Each process uses 3 OpenMP threads.
- Therefore, each run uses 6 × 3 = 18 cores, and the two runs on a node use all 36 of its cores.

To submit a job we need a submission script like this:

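```bash
#!/bin/bash

#SBATCH --job-name=Example_Job
# maximum duration of the job, format hh:mm:ss
#SBATCH --time=00:01:00
# Do not share nodes with other users
#SBATCH --exclusive
# total number of tasks (MPI processes)
#SBATCH --ntasks=24
# number of nodes
#SBATCH --nodes=2
# number of tasks per node
#SBATCH --ntasks-per-node=12
# number of cores (OpenMP threads) for each task
#SBATCH --cpus-per-task=3
# Your supercomputer admin gives you this
#SBATCH --account=YourAccountCode
# Cirrus "standard" partition is for running on CPU nodes
#SBATCH --partition=standard
#SBATCH --qos=standard

# Load the default HPE MPI environment (MPT).
# Load only one MPI implementation: the one the program was compiled with.
# If you built with Open MPI instead, replace this line with: module load openmpi
module load mpt

# Change to the submission directory
cd "$SLURM_SUBMIT_DIR"

# Set the number of OpenMP threads (must match --cpus-per-task)
export OMP_NUM_THREADS=3

# Launch 4 runs at the same time, each in the background (&)
for job in $(seq 0 3)
do
    # Pass --cpus-per-task explicitly: some Slurm versions do not inherit it from the header.
    # --exact (Slurm 20.11+) limits each step to the CPUs it asks for,
    # so two 18-core steps can share a 36-core node.
    srun --ntasks=6 --nodes=1 --cpus-per-task=3 --exact ./a.out ${job} &
done

# Wait for all background runs to finish before the job script exits
wait
```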

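Note that the #SBATCH lines are not comments: sbatch reads them as options, and it stops reading them at the first command in the script. All other lines starting with # are ordinary comments.

Each srun call is given its own --ntasks and --nodes; otherwise every srun in the loop would use the allocation from the header, i.e. all the resources. The & at the end of the srun line runs each call in the background so the four runs start together, and wait keeps the job alive until all of them have finished.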

If you want to run a single program, drop the loop and use

srun ./YourProgramExecutable

It will then use all the resources specified in the header.

For an MPI-only program without OpenMP, set:

#SBATCH --cpus-per-task=1

export OMP_NUM_THREADS=1

Slurm commands

You submit a script with

sbatch YourScriptFile

This puts your script in a queue until enough resources are free. The command also prints the ID of the submitted job.

You can cancel a submitted job via

scancel IdOfJob

You can see your queued or running jobs with

squeue -u YourUserNameOnCluster

You get something like this:

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

220131 standard Example_ UserName PD 0:00 2 (Priority)

- ST (state) can be PD (pending), R (running), CG (completing), and a few others.
- TIME shows how long the job has been running.
- NODELIST(REASON) lists the nodes of a running job, or the reason a pending job is waiting (here, Priority).

Once the job has finished, squeue no longer shows it.

To get information about the allocation of resources to a task we can use:

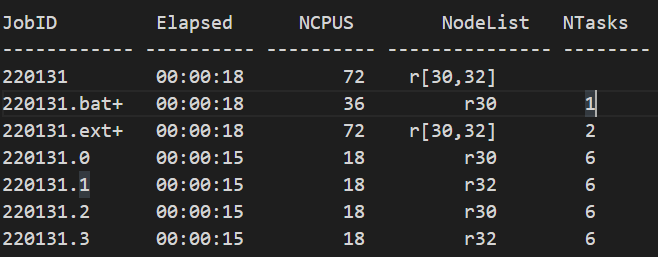

sacct -j 220131 --format=JobID,Start,End,Elapsed,NCPUS,NodeList,NTasks

For the above tasks I got:

Here, you see number of CPUS referes to number of cores each program used.

To show more fields with sacct, see its manual page in a terminal (sacct --helpformat also lists every field name you can pass to --format):

man sacct

By default, both the stdout and stderr of a Slurm job are written to a file in the submission directory named

slurm-JobId.out

Some notes

The way I distributed threads and processes in this example is not the most efficient. For a hybrid MPI-OpenMP program, it is usually better to run few MPI processes per node (for example, one per node) and give all the cores there to OpenMP threads. However, the example is designed to show the flexibility of Slurm in managing complex jobs.

More Info

This post is meant to get you started quickly with Slurm. Slurm offers much more, and its documentation is thorough enough to use as a reference.

When working with a supercomputer, it is always a good idea to ask its system administrators for a sample script tailored to that machine.
